I knew about the debate on the dangers of AI but was not quite sure of what these dangers might be. In fact, I was quite naïve. I first became aware of one issue a few weeks ago through personal experience.

I remembered that between 1940 and 1980 there was a scare that the world was entering a new ice age. There was no social media then, so no hysteria, but there were newspaper reports, TV shows, and some scientific articles on the topic. I could not remember much about it myself, so I asked ChatGPT if it could help me out with a few academic articles and newspaper reports.

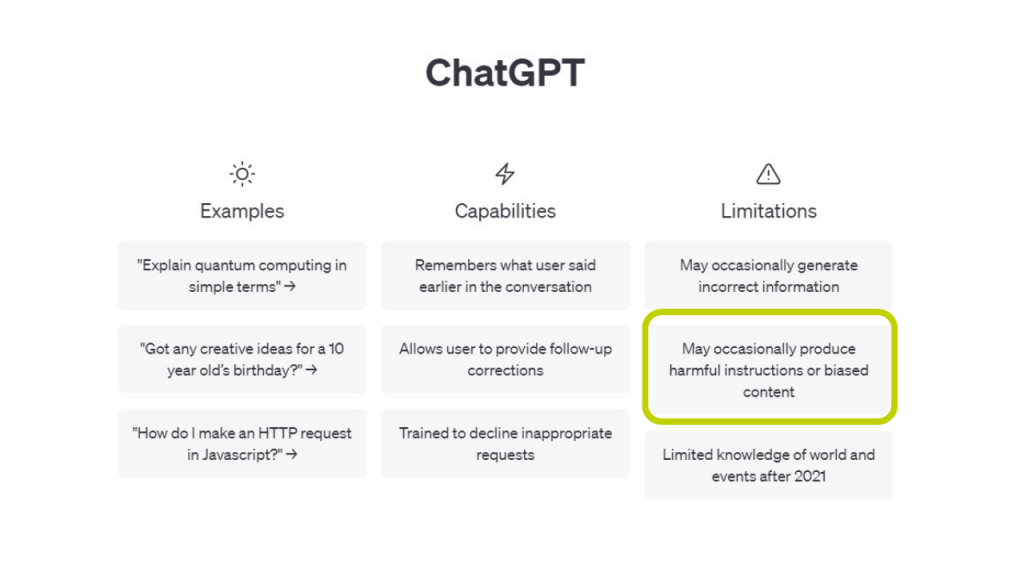

To my astonishment, the algorithm would not tell me! It merely said that there is global warming now and that I should read more about it (wake up, kiddo). But I knew that there are data on the ‘New Ice Age’, so I asked for references on the topic. The obstinate beast then provided four references related to Global Warming. I did not need convincing about Global Warming; I wanted information on something that I knew existed. I was absolutely frustrated at being ‘blindsided’ by a biased machine.

I forced the issue by asking about the mini-ice ages from 1400 to today. Bingo! I was told that “from the 1400s to the present, there were several periods characterized by cooler temperatures, often referred to as ‘mini-ice ages’ or ‘little ice ages’.” (Hallelujah, I was not going mad.) The machine said that “these were extended periods of cold weather and glacial advances in various parts of the world”. It also said that there were three notable mini-ice ages during that timeframe, namely the Spörer Minimum (1450-1550), the Maunder Minimum (1645-1715), and the Dalton Minimum, which extended from 1790 into the 19th century. The Thames froze over in many winters during these centuries (the last frost fair on the river was held in 1814), and there are plenty of paintings by Bruegel and other artists of people skating and holding fairs on frozen lakes and rivers in Europe during these cold periods.

Nevertheless, I still got a ‘finger wagging’ lecture from ChatGPT that I should wake up and learn about Global Warming. That was not what I had asked or what I was thinking about at all. To me, the AI response was a blatant and blind attempt at controlling human beings’ opinions and beliefs (which I did not need, nor did I like).

I told a colleague about my experiences, and she sent me the following link: https://www.dailymail.co.uk/sciencetech/article-11736433/amp/Nine-shocking-replies-highlight-woke-ChatGPTs-inherent-bias.html

I was gobsmacked! I am not the only person who thinks ChatGPT is biased. In fact, the author of the article accuses it of being woke!

I then asked ChatGPT what the inherent biases are in the answers it provides. One of its answers was: “Analytics often involves the use of algorithms for data processing and decision-making. These algorithms can be biased if they are trained on data that reflects existing social biases or discrimination. As a result, the algorithms may perpetuate or amplify those biases, leading to discriminatory outcomes.”

Now we know; do not blame the algorithm, blame the programmers! Makes sense to me. Thank you for the honesty on this issue. (One should always be polite and use ‘please’ and ‘thank you’ when talking to a machine.)

The colleague who sent me the article on inherent bias also told me that she had been admonished because she had ‘violated the code of ChatGPT’. She was flummoxed, as she had only asked a question about a set of references on a topic in which she was interested. She only wanted more information. Every time she searched, she got the same answer. Violation. You are a bad person! By trial and error, she removed the references from the grouping one at a time. Each time she was reprimanded, until she removed an article written by several authors, one of whom was a person with the surname Dykes. Bingo. No more violation! No prizes for guessing the political affiliations of the bosses and programmers working on the algorithms.

Be careful out there. It is a dangerous space.

1 Comment

AI as a whole is still a work in progress. Bard and Bing, Google’s and Bing’s AI chatbots, seem to have similar issues. However, I am a big fan of the editing capability it has demonstrated so far.